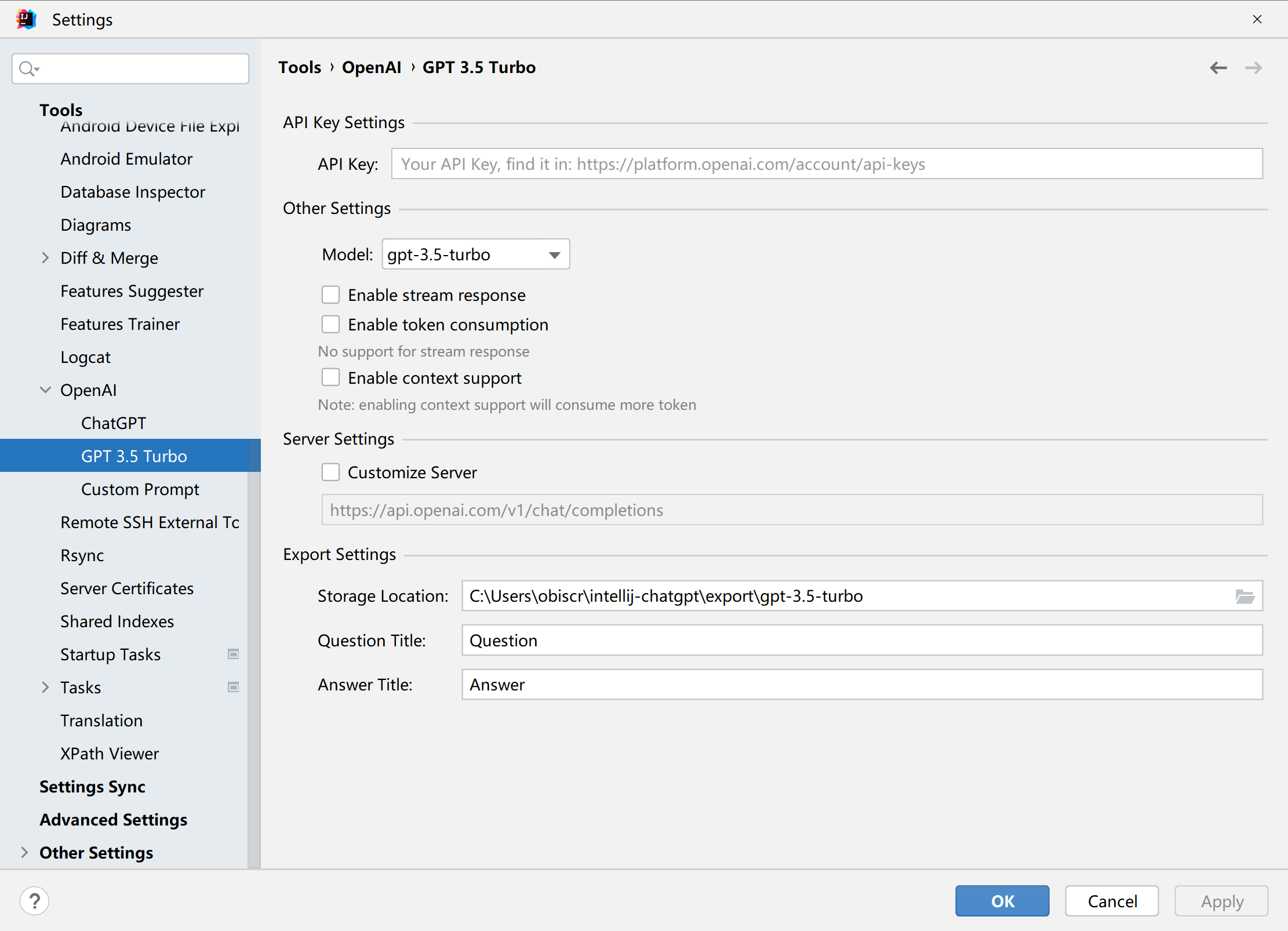

GPT 3.5 Turbo settings

API Key Setting

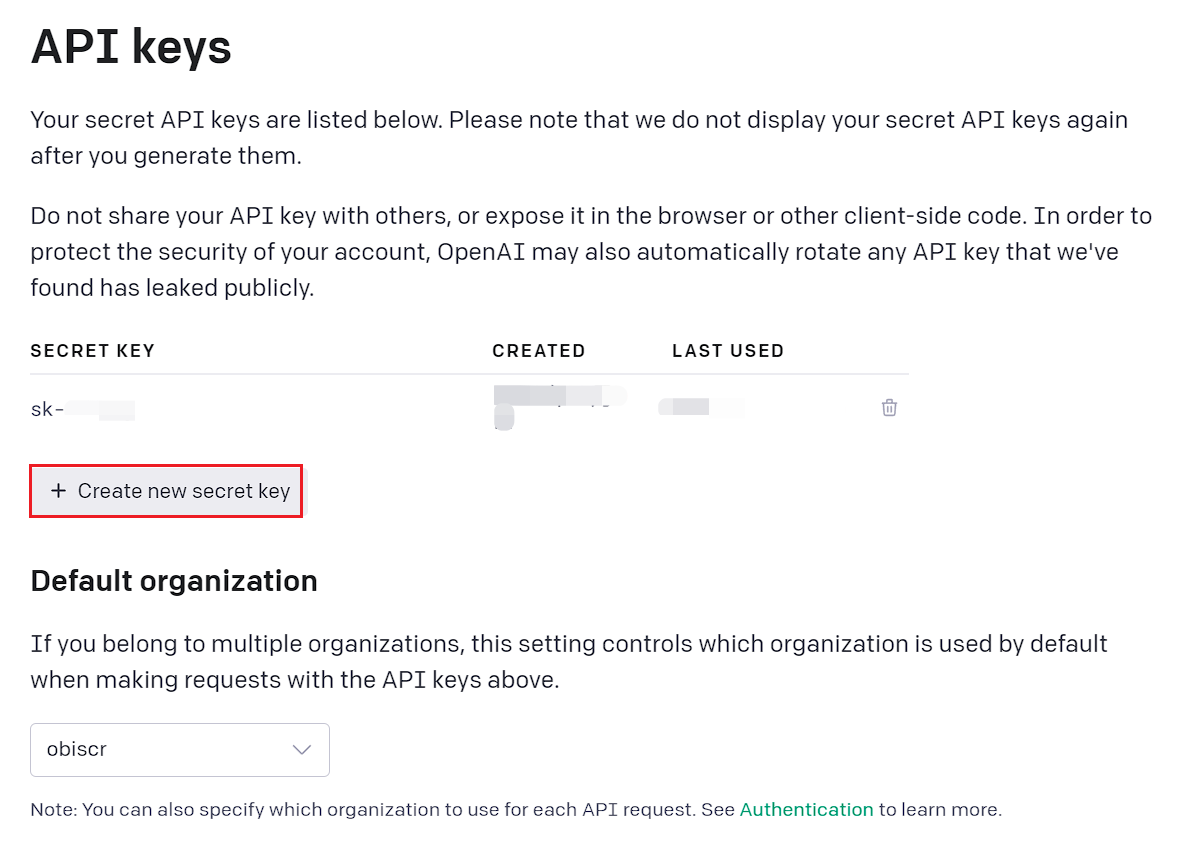

Get API Key

First, register an OpenAI account (this is required). Then open the official OpenAI website and log in. After logging in, open the same page again.

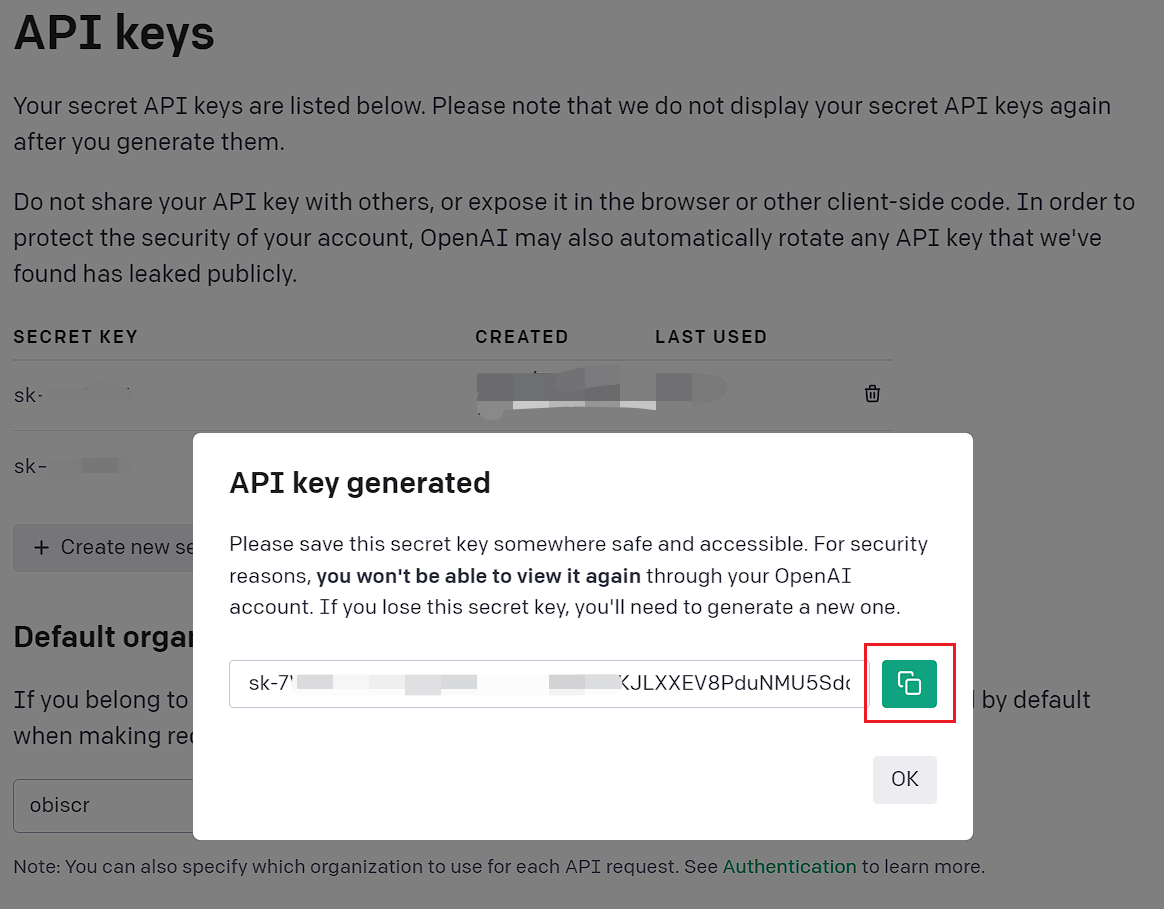

Click Create new secret key. The key is generated as shown below; click the Copy button on its right to copy it.

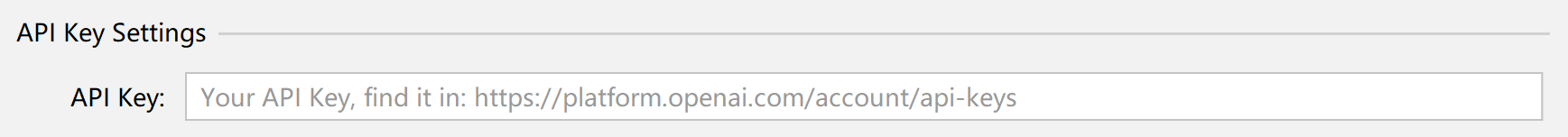

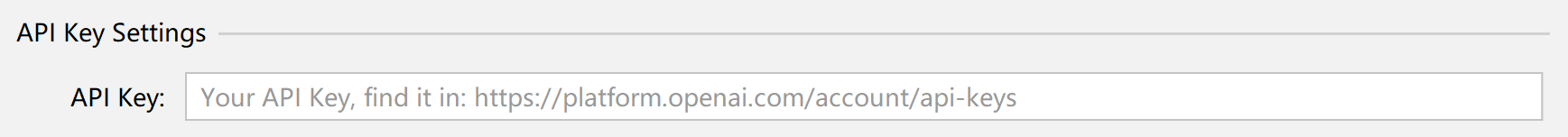

Configure the key in the plugin

Paste the copied key into the API Key field of the official source in the IDE ChatGPT settings.
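For context, the OpenAI API expects the secret key as a Bearer token in the `Authorization` header of every request. The sketch below only illustrates that mechanism with the standard library; the function name `build_request` is hypothetical and this is not the plugin's own code. It builds the request without sending it.

```python
import json
import urllib.request

# Chat endpoint of the official OpenAI source.
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_request(api_key: str, payload: dict,
                  url: str = OPENAI_CHAT_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request authenticated with the key."""
    headers = {
        "Content-Type": "application/json",
        # OpenAI reads the secret key from a Bearer token; strip() drops
        # stray whitespace picked up when pasting the key.
        "Authorization": f"Bearer {api_key.strip()}",
    }
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


if __name__ == "__main__":
    req = build_request("sk-your-key", {
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": "Hi"}],
    })
    print(req.get_method(), req.full_url)
    # Sending it for real would be: urllib.request.urlopen(req)
```

Never commit a real key to source control; `sk-your-key` above is a placeholder.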

Other settings

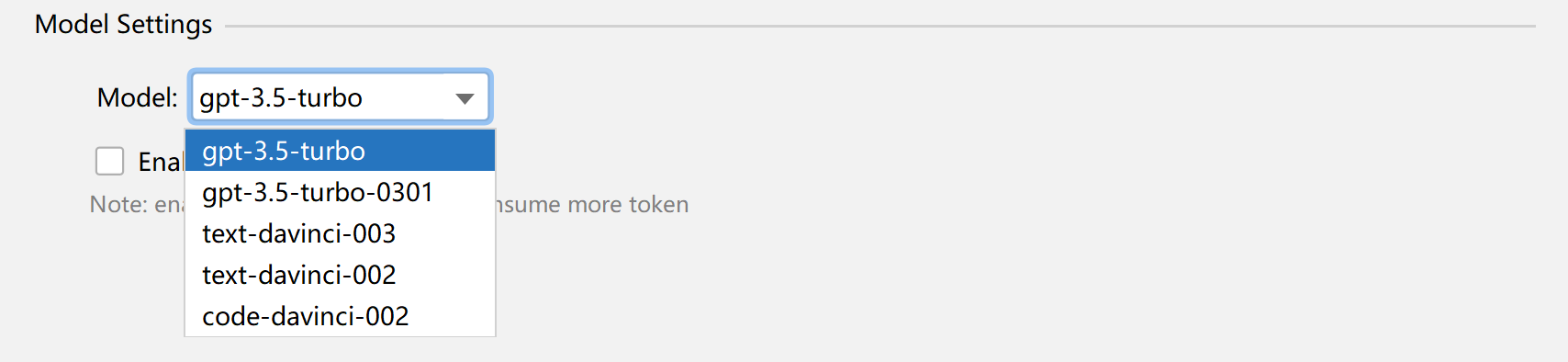

Dialogue model

The GPT 3.5 Turbo option supports five models:

- gpt-3.5-turbo (default model)

- gpt-3.5-turbo-0301

- text-davinci-003

- text-davinci-002

- code-davinci-002

Choose the model that fits your needs.
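On the OpenAI API, the two `gpt-3.5-turbo` models are served by the Chat Completions endpoint, while the `text-davinci-*` and `code-davinci-002` models used the legacy Completions endpoint, which takes a plain prompt instead of a message list. The sketch below shows that difference in request shape; `build_payload` is a hypothetical helper, and how the plugin routes models internally is an assumption.

```python
CHAT_MODELS = {"gpt-3.5-turbo", "gpt-3.5-turbo-0301"}
COMPLETION_MODELS = {"text-davinci-003", "text-davinci-002", "code-davinci-002"}


def build_payload(model: str, question: str, stream: bool = False):
    """Return (endpoint path, JSON body) for one question to the given model."""
    if model in CHAT_MODELS:
        # Chat models take a list of role-tagged messages.
        endpoint = "/v1/chat/completions"
        payload = {"model": model,
                   "messages": [{"role": "user", "content": question}]}
    elif model in COMPLETION_MODELS:
        # Legacy completion models take a single prompt string; max_tokens
        # defaults to 16 there, so 512 is set explicitly (arbitrary choice).
        endpoint = "/v1/completions"
        payload = {"model": model, "prompt": question, "max_tokens": 512}
    else:
        raise ValueError(f"unsupported model: {model}")
    payload["stream"] = stream
    return endpoint, payload
```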

Enable streaming return

With streaming enabled, the answer is displayed as it is generated, so you do not have to wait for the complete response.
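With `"stream": true`, the OpenAI API sends the answer as server-sent events: each `data:` line carries a JSON chunk, and the stream ends with `data: [DONE]`. A minimal parser sketch follows, assuming the lines have already been read from the HTTP response; `iter_stream_text` is a hypothetical name.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent event lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines between events
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream marker
        choice = json.loads(data)["choices"][0]
        # Chat models send incremental text in "delta"; completion models in "text".
        piece = choice.get("delta", {}).get("content") or choice.get("text") or ""
        if piece:
            yield piece
```

Each fragment can be appended to the chat window as soon as it arrives, which is what makes streaming feel faster.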

Enable Token statistics

Show the number of Tokens consumed after each conversation finishes.

Caution

This feature will not work if streaming return is on.
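A non-streaming OpenAI response includes a `usage` object with `prompt_tokens`, `completion_tokens`, and `total_tokens`. Streamed chunks for these models did not carry that object, which is a plausible reason for the limitation above; this is an inference, not a documented detail of the plugin. The helper name `format_token_usage` is hypothetical.

```python
from typing import Optional


def format_token_usage(response: dict) -> Optional[str]:
    """Summarize the usage block of a non-streaming response, if present."""
    usage = response.get("usage")
    if usage is None:
        return None  # e.g. streamed output, where no usage block was sent
    return (f"prompt: {usage['prompt_tokens']}, "
            f"completion: {usage['completion_tokens']}, "
            f"total: {usage['total_tokens']}")
```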

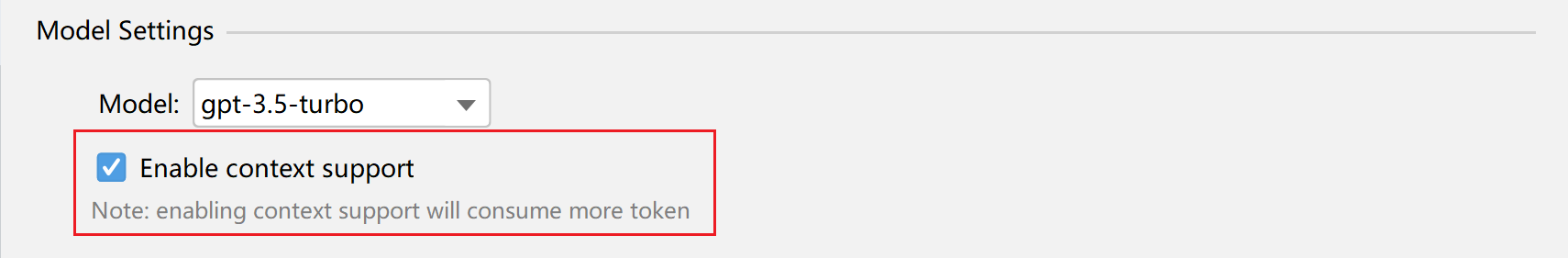

Enable contextual support

Caution

This feature is off by default. To use it, check Enable Context Support here.

Enabling contextual support consumes more Tokens, because earlier messages of the conversation are sent again with each new question.
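The Chat Completions API does not remember earlier turns, so a client that wants context must resend the history with every request, and the prompt grows with each exchange. The sketch below shows that pattern; the `Conversation` class is hypothetical and only assumes the plugin works along these lines.

```python
class Conversation:
    """Collects chat history and decides what to send with each question."""

    def __init__(self, context_enabled: bool):
        self.context_enabled = context_enabled
        self.history = []  # alternating user/assistant messages

    def messages_for(self, question: str) -> list:
        new_message = {"role": "user", "content": question}
        if not self.context_enabled:
            return [new_message]  # only the current question is sent
        # With context on, every earlier turn is sent again (more Tokens).
        return self.history + [new_message]

    def record(self, question: str, answer: str) -> None:
        if self.context_enabled:
            self.history.append({"role": "user", "content": question})
            self.history.append({"role": "assistant", "content": answer})
```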

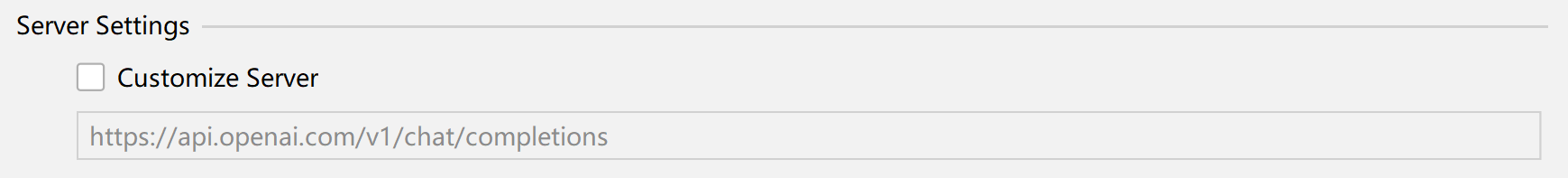

Server Settings

If you have other API sources, configure them here.

Note

The request parameters and the response data structure of a custom source must match those of the official OpenAI API; otherwise parsing errors may occur.
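One quick way to check whether a custom source is compatible is to confirm that its response contains the fields an OpenAI-style chat parser reads, namely `choices[0].message.content`. The sketch below assumes the custom source is configured as a base URL that mirrors OpenAI's `/v1/chat/completions` path; both helper names are hypothetical, and the plugin may expect a full URL instead.

```python
def custom_chat_url(base_url: str) -> str:
    """Hypothetical: append OpenAI's chat path to a custom base URL."""
    return base_url.rstrip("/") + "/v1/chat/completions"


def is_openai_compatible(response) -> bool:
    """Check for the minimal fields an OpenAI-style chat parser reads."""
    try:
        content = response["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError):
        return False
    return isinstance(content, str)
```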

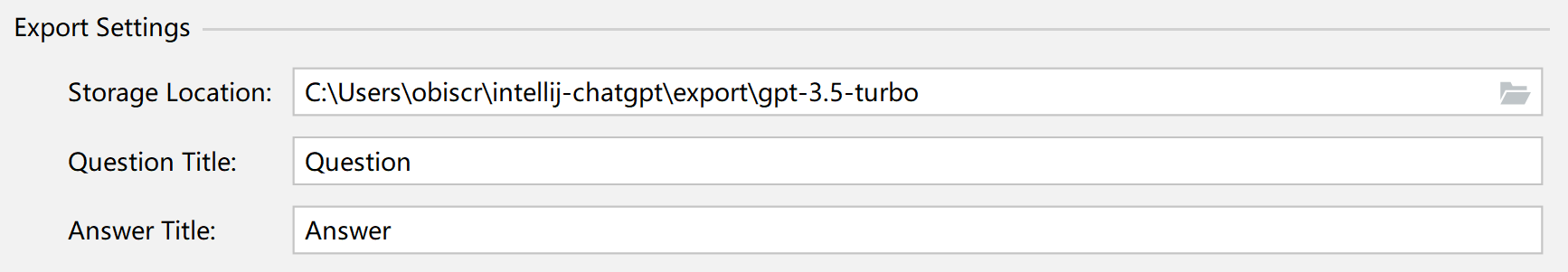

Export settings

The exported file is in Markdown format by default.

- Storage Location: the default location where exported dialogs are saved.

- Question: the title used for questions in the exported file.

- Answer: the title used for answers in the exported file.
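To make the three settings concrete, the sketch below writes question/answer pairs to a Markdown file using configurable titles and a storage folder. The `##` heading level, the default file name, and the function names are all assumptions for illustration; the plugin's actual export layout may differ.

```python
from pathlib import Path


def export_markdown(pairs, question_title="Question", answer_title="Answer") -> str:
    """Render (question, answer) pairs as Markdown with the configured titles."""
    lines = []
    for question, answer in pairs:
        lines += [f"## {question_title}", "", question, ""]
        lines += [f"## {answer_title}", "", answer, ""]
    return "\n".join(lines)


def save_export(text: str, storage_dir: str, filename: str = "chat-export.md") -> Path:
    """Write the export into the storage location (hypothetical file name)."""
    path = Path(storage_dir) / filename
    path.write_text(text, encoding="utf-8")
    return path
```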

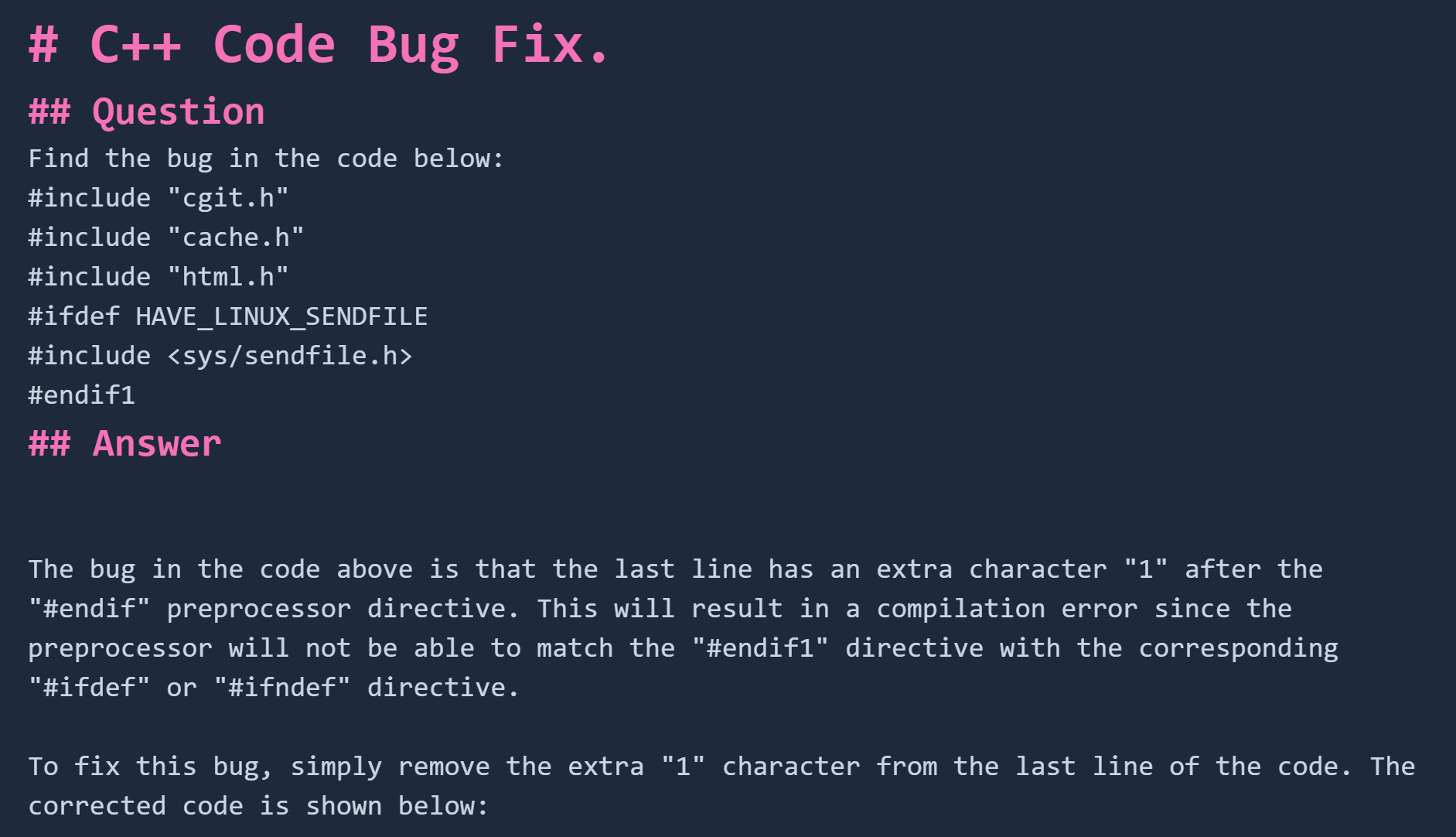

For reference, here is an example: